Augmented Reality Tour for a Fraunhofer Facility

For the inauguration of the Fraunhofer Institute for Casting, Composite, and Processing Technology (IGCV) near Munich, I was commissioned to develop an Augmented Reality experience allowing guests to explore the soon-to-be-installed casting machines by seamlessly interweaving virtual content with the existing facilities of the technology center. This blog post highlights some key challenges and showcases the outcome of the project.

When inaugurating a new technology center, you want to show guests and sponsors the plant in its final state. Complex equipment, however, often arrives only in the months after the plant itself opens. This led the IGCV to take an innovative approach: they hired me to develop an application that lets visitors view the casting machines in their future positions through Augmented Reality.

The first challenge in developing the AR app was the sheer size of the 3D models provided by the IGCV. Their suppliers delivered CAD models of the machines that included every single screw, resulting in models with several thousand components. To reduce the complexity, I used the 3D modeling software Blender to remove components that are too small for users to notice or not visible from the outside at all. I then converted the models into the OBJ format with PNG image textures using UV mapping.

With the models ready for use and a Java-based prototype app set up, using ARCore for environment tracking and OpenGL for rendering, I faced the challenge of accurately positioning the virtual casting machines in the technology center. To this end, I used ARCore’s Augmented Images functionality. This feature lets the app detect a set of predefined images with the device’s camera and returns their estimated position and orientation in the world coordinate system of the ARCore tracking session. For accurate positioning and ease of use, I installed the visual tracking images on the floor of the technology center, just in front of the eventual position of the Augmented Reality models:

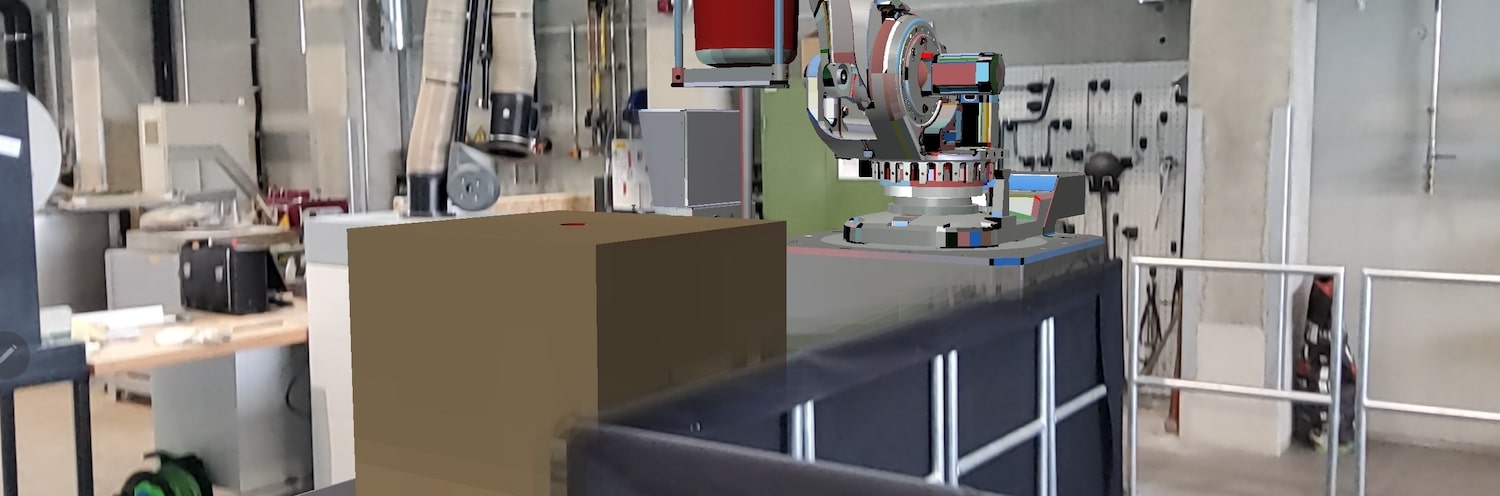

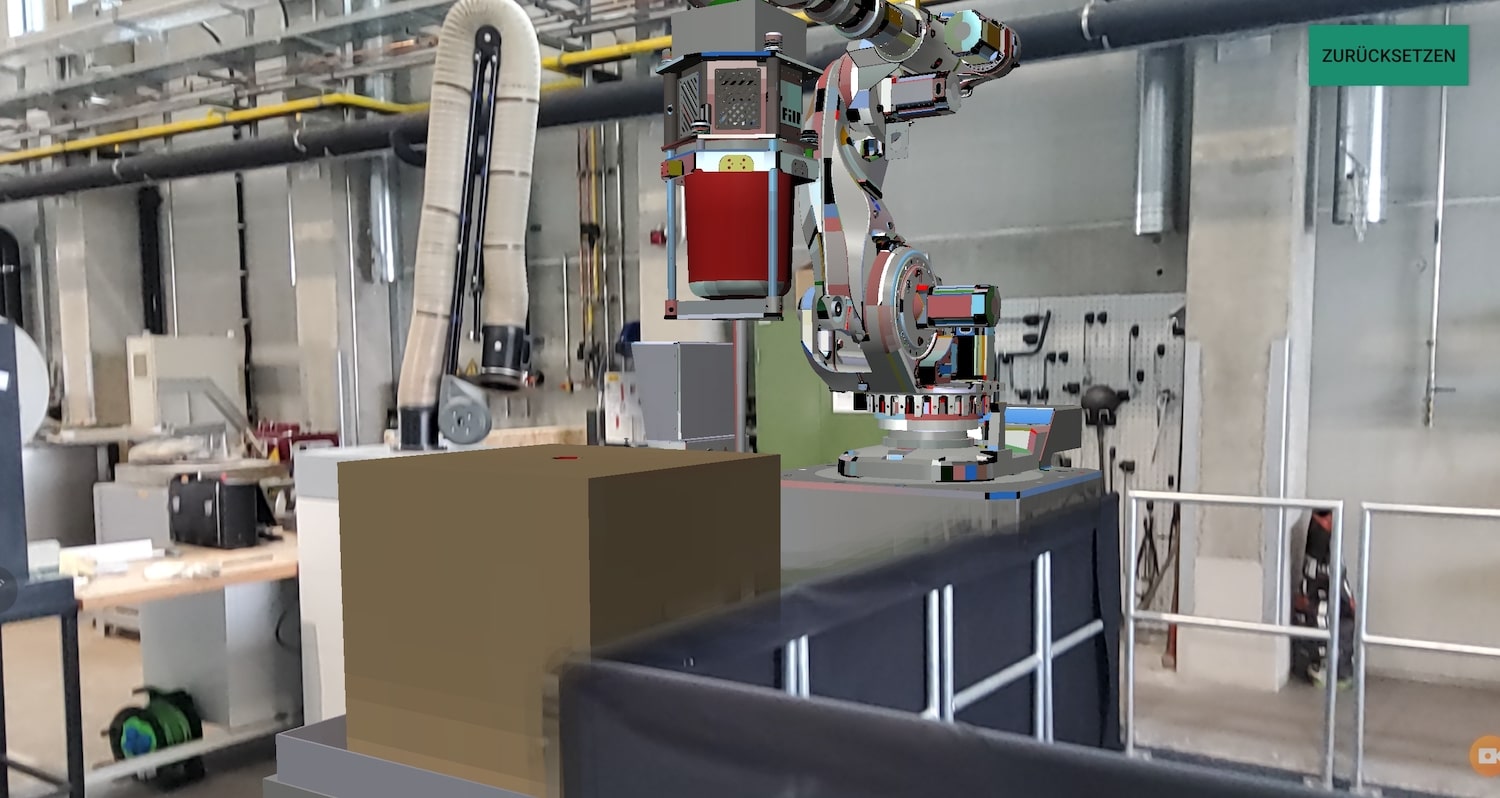

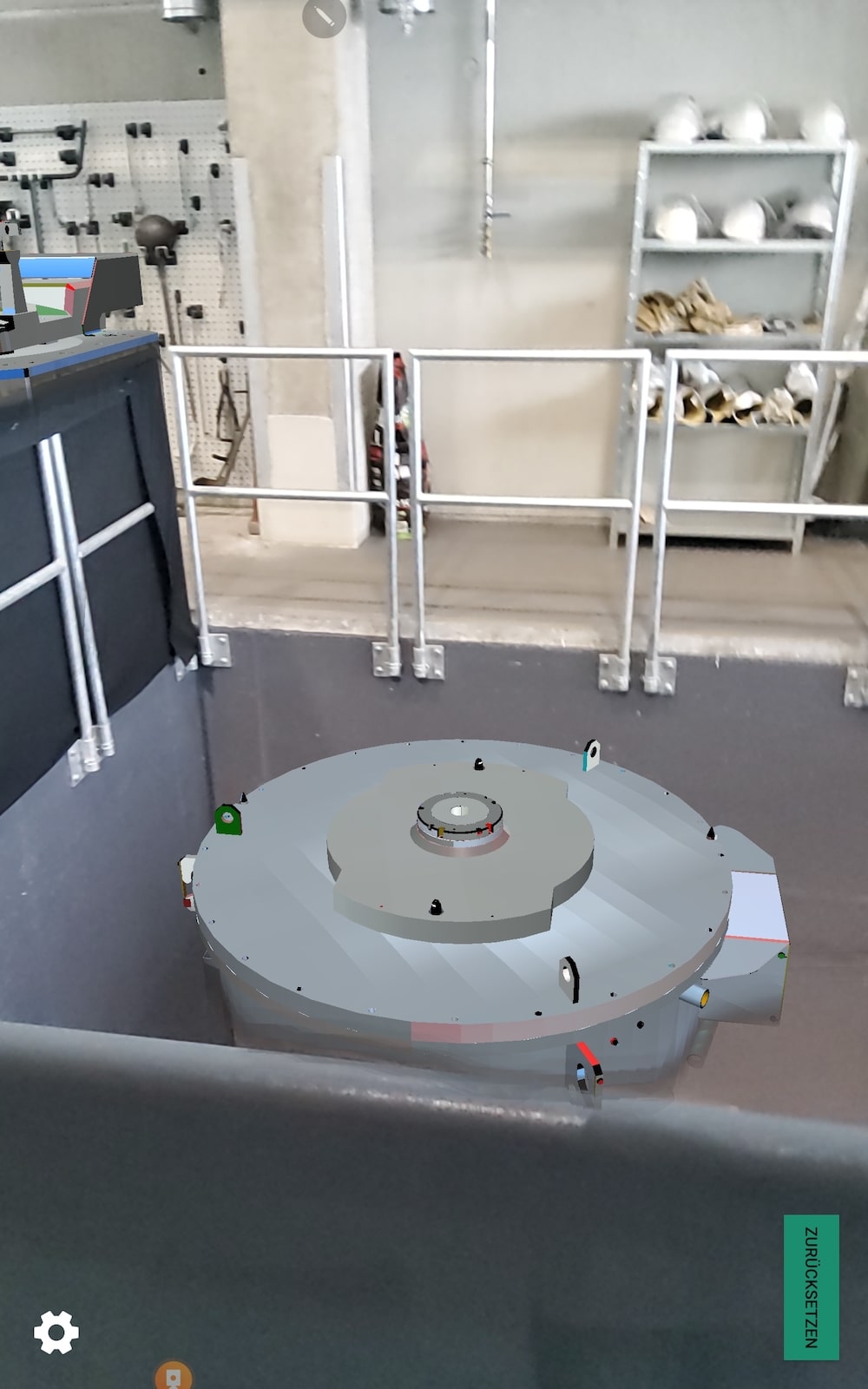

Connected to the Augmented Reality app, the markers allowed the model to be displayed in place, providing an accurate impression of the future state of the IGCV plant:

Usually, the Augmented Images feature of ARCore is used to animate photographs or display virtual content directly around the tracked image. In this case, however, the image markers were merely used to obtain an accurate position in space, while the actual models were placed several meters away from the marker. Combined with the fact that the models themselves spanned up to 12 meters, normally unnoticeable errors in the estimated orientation of the image anchor had intolerable effects on the user experience: if the estimated normal vector of the detected image was slightly off, the whole model appeared crooked, floating in the air on one side and piercing the floor on the other.
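To get a feeling for the size of this effect, here is a minimal sketch of the underlying lever-arm arithmetic. It is purely illustrative and not taken from the app: the class and method names are hypothetical, and the 12-meter distance is simply the largest model extent mentioned above. A tilt error of only 1 degree already moves a point 12 meters from the marker by roughly 21 centimeters in height.

```java
/** Illustrative only: how a small tilt error at the marker grows with distance. */
final class TiltLeverArm {

    /**
     * Vertical displacement (in meters) of a point that lies distanceM meters
     * away from the marker when the estimated normal is tilted by tiltDeg degrees.
     */
    static double verticalOffset(double distanceM, double tiltDeg) {
        return distanceM * Math.sin(Math.toRadians(tiltDeg));
    }

    public static void main(String[] args) {
        double distanceM = 12.0; // assumed distance, matching the largest model extent
        double[] tiltsDeg = {0.5, 1.0, 2.0}; // hypothetical orientation errors
        for (double tilt : tiltsDeg) {
            System.out.printf("tilt %.1f deg -> %.3f m offset at %.0f m%n",
                    tilt, verticalOffset(distanceM, tilt), distanceM);
        }
    }
}
```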

To overcome this problem, I developed an algorithm for correcting faulty estimations of image anchor pose by utilizing ARCore’s plane tracking feature, more specifically, its capability to estimate normal vectors of planes detected in the environment:

In the above graphic, the red coordinate system displays a skewed estimation of the shown image anchor’s pose in the world coordinate system. Utilizing ARCore’s plane tracking, I was able to obtain a reliable estimation of the normal vector of the plane on which the image anchor is placed, displayed in blue. Using geometric projections and transformations, I rotated the initially estimated red coordinate system so that its normal vector matches the plane’s (blue) normal vector while the change in the coordinate system’s remaining axes is minimized. With this algorithm, the AR application reliably obtained an accurate position and orientation from the image anchors while guaranteeing that the models are displayed upright and on the same level as the surrounding floor.
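One way to realize such a correction is the shortest-arc rotation that maps the estimated normal onto the plane normal, applied to all three axes of the marker frame. The following sketch is my illustration of that idea under stated assumptions, not the exact code used in the app: vectors are plain `double[3]` arrays instead of ARCore poses, the frame's y axis is assumed to be the marker normal, and all class and method names are hypothetical.

```java
/** Sketch: align a skewed marker frame with a trusted plane normal (shortest-arc rotation). */
final class NormalAlignment {

    static double dot(double[] u, double[] v) {
        return u[0] * v[0] + u[1] * v[1] + u[2] * v[2];
    }

    static double[] cross(double[] u, double[] v) {
        return new double[] {
            u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]
        };
    }

    static double[] normalize(double[] v) {
        double len = Math.sqrt(dot(v, v));
        return new double[] {v[0] / len, v[1] / len, v[2] / len};
    }

    /** Rotates v by the smallest rotation that maps unit vector 'from' onto unit vector 'to'. */
    static double[] rotateMinimal(double[] from, double[] to, double[] v) {
        double c = dot(from, to); // cosine of the rotation angle
        if (c < -0.999999) {
            throw new IllegalArgumentException("normals are opposite; rotation axis is ambiguous");
        }
        double[] n = cross(from, to); // rotation axis scaled by the sine of the angle
        double[] nxv = cross(n, v);
        double k = dot(n, v) / (1.0 + c);
        // Rodrigues' formula, rewritten so that no trigonometric functions are needed.
        return new double[] {
            c * v[0] + nxv[0] + k * n[0],
            c * v[1] + nxv[1] + k * n[1],
            c * v[2] + nxv[2] + k * n[2]
        };
    }

    /** axes[0..2] are the x, y, z axes of the estimated frame; y is assumed to be the marker normal. */
    static double[][] alignFrame(double[][] axes, double[] planeNormal) {
        double[] from = normalize(axes[1]);
        double[] to = normalize(planeNormal);
        double[][] corrected = new double[3][];
        for (int i = 0; i < 3; i++) {
            corrected[i] = rotateMinimal(from, to, axes[i]);
        }
        return corrected;
    }

    public static void main(String[] args) {
        double a = Math.toRadians(3.0); // hypothetical 3 degree tilt around the z axis
        double[][] skewed = {
            {Math.cos(a), Math.sin(a), 0}, {-Math.sin(a), Math.cos(a), 0}, {0, 0, 1}
        };
        double[][] fixed = alignFrame(skewed, new double[] {0, 1, 0});
        System.out.println(java.util.Arrays.deepToString(fixed));
    }
}
```

Because the rotation axis is perpendicular to both normals, the frame is not additionally twisted around the normal, which is one reasonable reading of "minimal change" for the remaining axes.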

Combining the developed technology with an intuitive UI to guide non-tech-savvy users through the process of scanning planes, detecting the visual image anchor, and finally viewing the model, I managed to achieve an AR experience that seamlessly integrates the virtual casting machine into the technology center:

Please note that the video’s quality is heavily scaled down to optimize this blog post’s loading time.

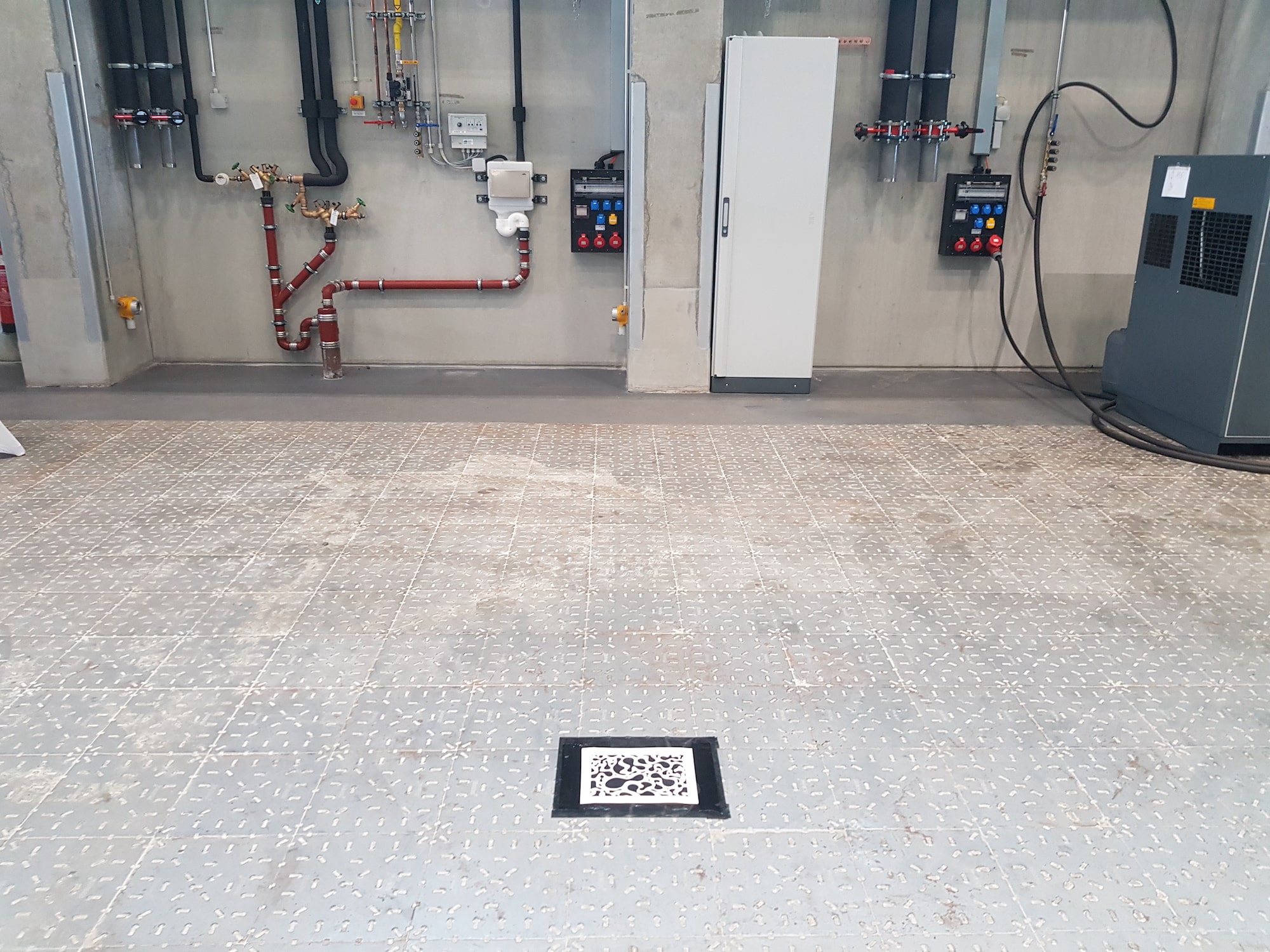

At this point, I turned towards an additional challenge arising from the placement of the second virtual casting machine:

The above image shows a railing surrounding a 2.5-meter-deep pit. As you have probably guessed by now, the second AR model was supposed to be anchored to the bottom of the pit, leading to every AR developer’s most feared requirement: occlusions. To realistically display a model that extends both above and below ground level and is additionally surrounded by a railing, it is indispensable to handle occlusions, hiding the parts of the model that are covered by real objects so that users cannot see through walls and floors.

To render occlusions, one needs to know how far away objects are from the camera. Basically, you need to know whether a real object in the line of sight is in front of or behind the AR object you want to render, and display only the parts of the AR object that are not hidden behind real-world elements. Obtaining these depth estimations is straightforward if the devices running the app have depth sensors like LiDAR or time-of-flight cameras, but for this project, single-camera tablets were used. Luckily, Google recently introduced the Depth API, an ARCore feature that allows computing dense depth maps from mono-camera input. In the OpenGL renderer, these depth maps can be used to determine and render only those parts of the model that should naturally be visible to the user, creating a realistic experience.
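At its core, the occlusion decision is a per-pixel depth comparison. The sketch below shows that comparison on the CPU for clarity; in the app it would run in the OpenGL fragment shader, and this is not the project's actual shader code. The names, the tolerance parameter, and the convention that a depth value of 0 means "no estimate" are assumptions made for this example. Depth values are taken as unsigned 16-bit millimeters, which is how ARCore's depth images store distance.

```java
/** Sketch: per-pixel occlusion test comparing virtual depth against an environment depth map. */
final class DepthOcclusion {

    /**
     * Returns true if a virtual fragment should be drawn.
     * envDepthMm: environment depth in millimeters (0 is treated as "unknown", an assumption).
     * fragmentDepthM: distance of the virtual fragment from the camera in meters.
     * toleranceM: slack that avoids hiding fragments lying almost exactly on a real surface.
     */
    static boolean isVisible(int envDepthMm, double fragmentDepthM, double toleranceM) {
        if (envDepthMm == 0) {
            return true; // no depth estimate available: fall back to drawing the fragment
        }
        double envDepthM = envDepthMm / 1000.0;
        return fragmentDepthM <= envDepthM + toleranceM;
    }

    /** Applies the test to a whole depth map stored as unsigned 16-bit values. */
    static boolean[] visibilityMask(short[] envDepthMm, double[] fragmentDepthM, double toleranceM) {
        boolean[] mask = new boolean[envDepthMm.length];
        for (int i = 0; i < envDepthMm.length; i++) {
            int depthMm = envDepthMm[i] & 0xFFFF; // interpret the short as unsigned
            mask[i] = isVisible(depthMm, fragmentDepthM[i], toleranceM);
        }
        return mask;
    }

    public static void main(String[] args) {
        short[] env = {2000, 2000, 0};      // real surface at 2 m, last pixel unknown
        double[] model = {1.5, 3.0, 3.0};   // virtual fragments at 1.5 m and 3 m
        System.out.println(java.util.Arrays.toString(visibilityMask(env, model, 0.05)));
    }
}
```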

The following video shows an overlay of depth information with the camera view and the AR model rendered in the pit, with red colors being surfaces close to the camera and blue areas being far away:

As you can see, the mono-camera depth estimation algorithm performs well in areas close to the camera and struggles with surfaces far away. This behavior is expected, as the depth values are computed using triangulation, so the error grows the farther away a point is. For the AR experience, this uncertainty causes parts of the model that are far away from the camera to flicker, because the algorithm is unsure whether the model is in front of or behind the physical scenery.
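The growth of the error can be made concrete with the textbook two-view triangulation model, in which the depth uncertainty scales with the square of the distance. The sketch below uses that simplified model; ARCore's actual depth-from-motion pipeline is more involved, and the focal length, baseline, and matching error are hypothetical values chosen only for illustration.

```java
/** Sketch: depth uncertainty of two-view triangulation, sigma_z = z^2 / (f * b) * sigma_d. */
final class TriangulationError {

    /**
     * depthM: true distance of the point in meters.
     * focalPx: focal length in pixels.
     * baselineM: distance between the two camera positions in meters.
     * disparityErrPx: matching error in pixels.
     */
    static double depthError(double depthM, double focalPx, double baselineM, double disparityErrPx) {
        return depthM * depthM / (focalPx * baselineM) * disparityErrPx;
    }

    public static void main(String[] args) {
        double focalPx = 500.0;       // hypothetical focal length
        double baselineM = 0.1;       // hypothetical camera movement between views
        double disparityErrPx = 0.5;  // hypothetical matching error
        for (double z : new double[] {1.0, 2.0, 4.0, 8.0}) {
            System.out.printf("depth %.0f m -> error %.3f m%n",
                    z, depthError(z, focalPx, baselineM, disparityErrPx));
        }
    }
}
```

Under this model, doubling the distance quadruples the depth error, which matches the observation that far-away parts of the model are the ones that flicker.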

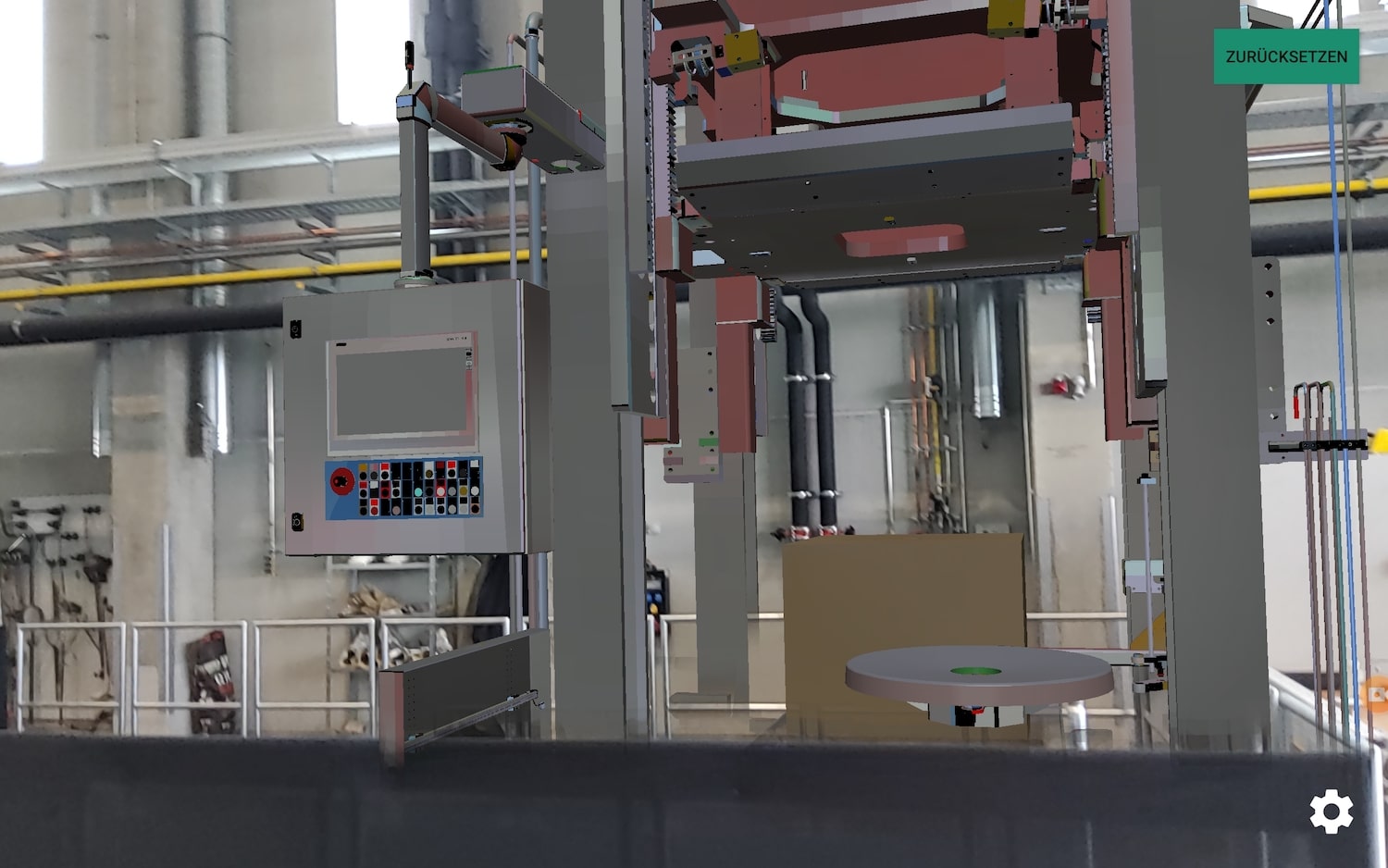

The following screenshots showcase the occlusion-enabled AR experience involving the casting machine placed in and around the pit:

When users walk towards the pit and look over the railing with their tablet, the parts of the machine inside of it are revealed in a natural way, offering seamless integration of virtual content with the real-world environment:

This final video is a screen capture from one of the tablets, showing the finished AR experience with occlusion handling. While there is still some flickering in far-away parts of the model, the overall experience is highly realistic and incorporates the virtual content into the physical environment in a natural and intuitive way. Again, note that the video’s quality is heavily reduced to optimize this blog post’s loading time.

Thanks for reading! If you or your company are thinking about using Augmented Reality as well, drop me a message and let’s discuss opportunities for you to profit from this fascinating technology!